Project Overview

Key Features

Automatic Object Detection

Leverage COCO-trained models to automatically identify and segment objects within uploaded images, eliminating the need for manual masking.

Diffusion-Based Inpainting

Use diffusion models to generate photorealistic content that fills the selected regions while staying consistent with the surrounding image.

Interactive Web Interface

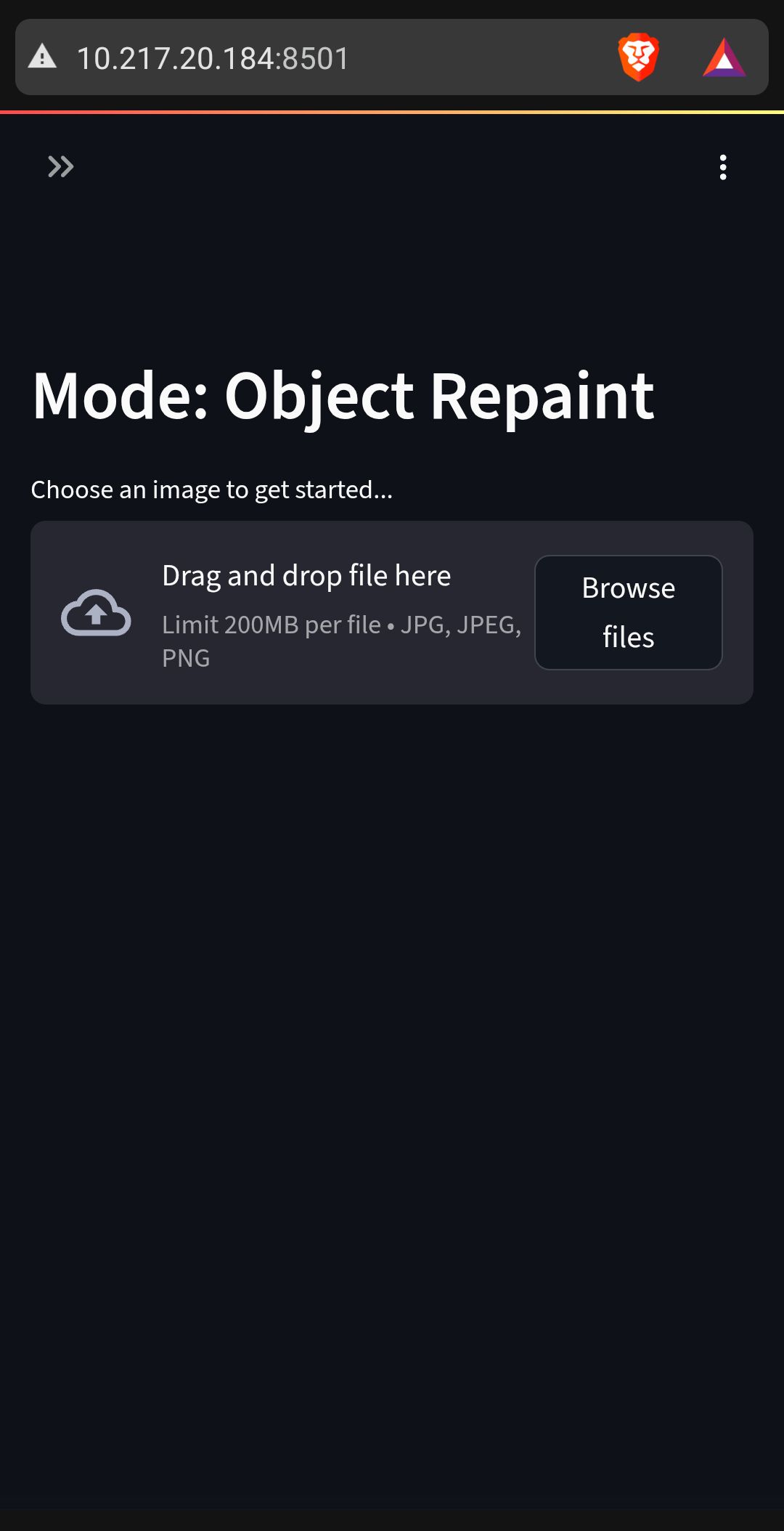

Built with Streamlit for an intuitive, responsive interface that works seamlessly across desktop and mobile devices.

RESTful API Backend

A FastAPI backend that exposes the image-processing services (object detection, inpainting, and filtering) through clearly defined REST endpoints.

How It Works

Architecture Overview

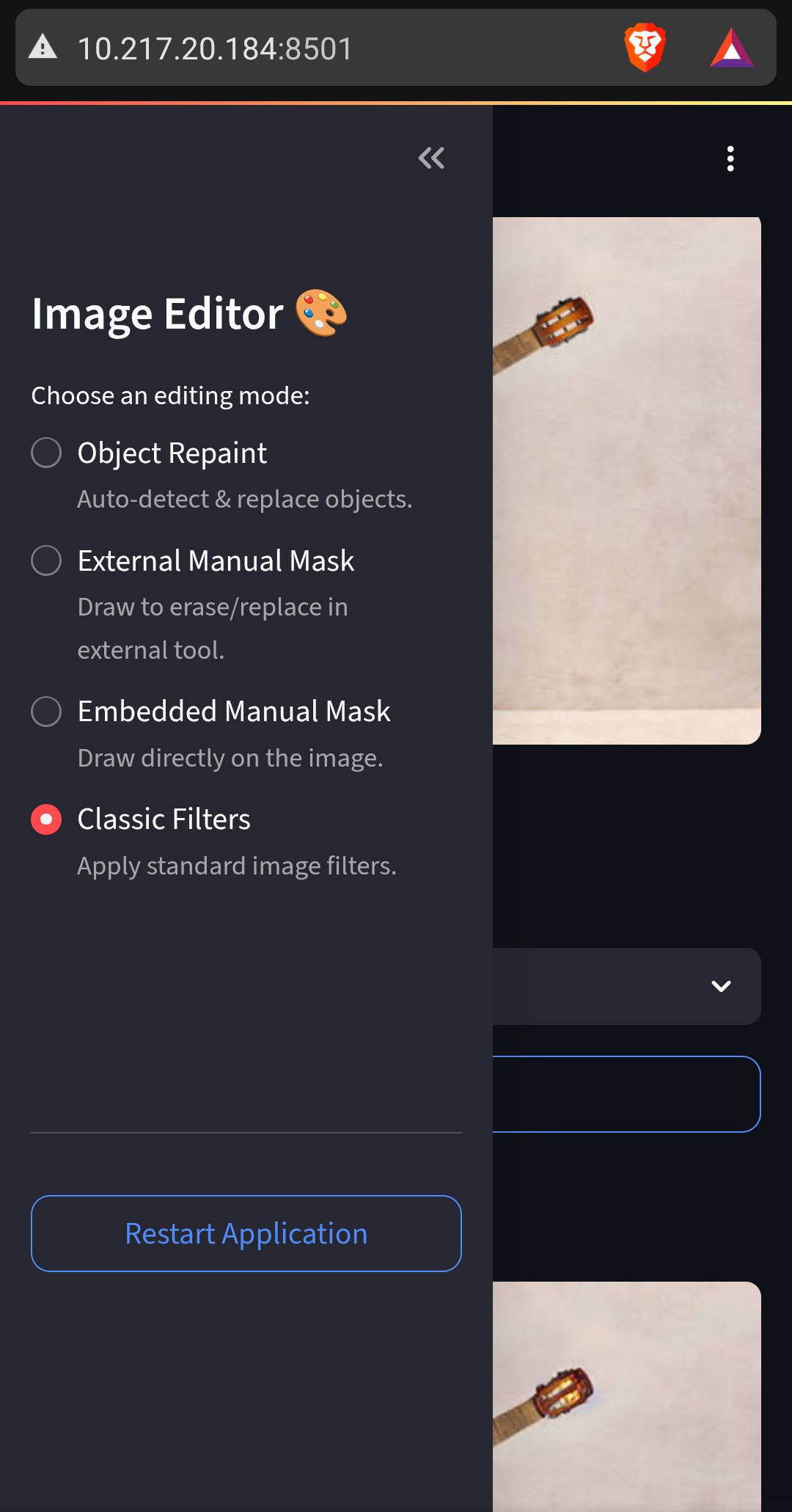

Frontend: Streamlit

An interactive web application that provides a user-friendly interface for image upload, object selection, and result visualization.

Backend: FastAPI

A high-performance REST API that handles image-processing requests, model inference, and result generation.

Object Detection

COCO-trained detection models automatically identify and segment objects within images for precise masking.
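The .env setting ANNOTE_MAPPING_FILE_PATH points to models/annotations/coco_category_mapping.json, which presumably translates the detector's numeric COCO class IDs into readable names for the selection step. A minimal sketch of loading such a file, assuming (hypothetically) a flat JSON object like {"1": "person", "3": "car"} and detections shaped as dicts with `category_id`, `score`, and `box` keys; the project's real file layout and detection format may differ. Reading os.environ also assumes the .env file has already been loaded into the environment, for example with python-dotenv's load_dotenv.

```python
import json
import os
from typing import Dict, List


def load_category_mapping(path: str) -> Dict[int, str]:
    """Load a {category_id: name} mapping from a JSON file.

    Assumes a flat JSON object such as {"1": "person", "3": "car"}.
    JSON object keys are always strings, so they are converted to int.
    """
    with open(path, encoding="utf-8") as f:
        raw = json.load(f)
    return {int(k): v for k, v in raw.items()}


def label_detections(detections: List[dict], mapping: Dict[int, str],
                     min_score: float = 0.5) -> List[dict]:
    """Attach a readable name to every detection above a confidence threshold.

    The keys "category_id" and "score" and the min_score default are
    hypothetical, chosen only for illustration.
    """
    labelled = []
    for det in detections:
        if det["score"] < min_score:
            continue  # drop low-confidence detections
        name = mapping.get(det["category_id"], "unknown")
        labelled.append({**det, "name": name})
    return labelled


if __name__ == "__main__":
    # Path from the environment, falling back to the default shown in .env
    path = os.environ.get(
        "ANNOTE_MAPPING_FILE_PATH",
        "models/annotations/coco_category_mapping.json",
    )
    if os.path.exists(path):
        mapping = load_category_mapping(path)
        print(f"Loaded {len(mapping)} categories from {path}")
```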

Inpainting Engine

Diffusion-based generative models create realistic content to fill masked regions while preserving image coherence.
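Whatever model generates the new content, a common final step in mask-based inpainting is compositing: pixels outside the mask are copied from the original, so only the masked region changes. Per pixel, with a binary mask m (1 = inpaint), out = m * generated + (1 - m) * original. The sketch below applies this on plain nested lists to stay dependency-free; a real implementation would work on NumPy arrays or tensors, and whether this project performs an explicit composite is an assumption.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values
Mask = List[List[int]]   # binary mask: 1 = inpainted region, 0 = keep original


def composite(original: Image, generated: Image, mask: Mask) -> Image:
    """Blend per pixel: out = m * generated + (1 - m) * original."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated and mask must have the same height")
    out = []
    for row_o, row_g, row_m in zip(original, generated, mask):
        if not (len(row_o) == len(row_g) == len(row_m)):
            raise ValueError("rows must have the same width")
        out.append([m * g + (1 - m) * o for o, g, m in zip(row_o, row_g, row_m)])
    return out


if __name__ == "__main__":
    original = [[10, 10], [10, 10]]
    generated = [[99, 99], [99, 99]]
    mask = [[0, 1], [0, 0]]
    print(composite(original, generated, mask))  # [[10, 99], [10, 10]]
```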

Processing Pipeline

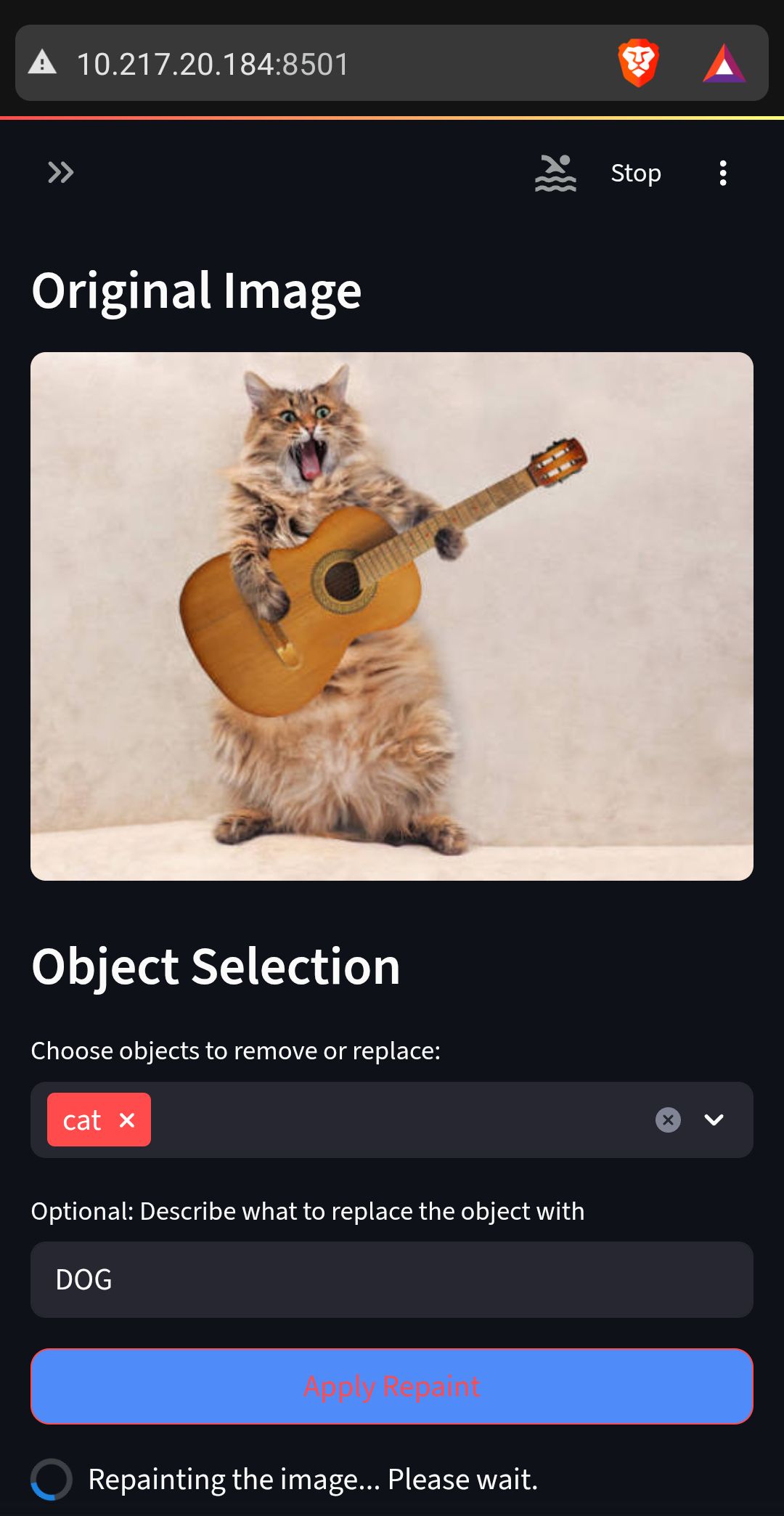

- Image Upload: User uploads an image through the Streamlit web interface

- Object Detection: Backend automatically detects and segments all objects using COCO categories

- Object Selection: User selects which detected objects to remove or modify

- Mask Generation: System creates precise binary masks for selected regions

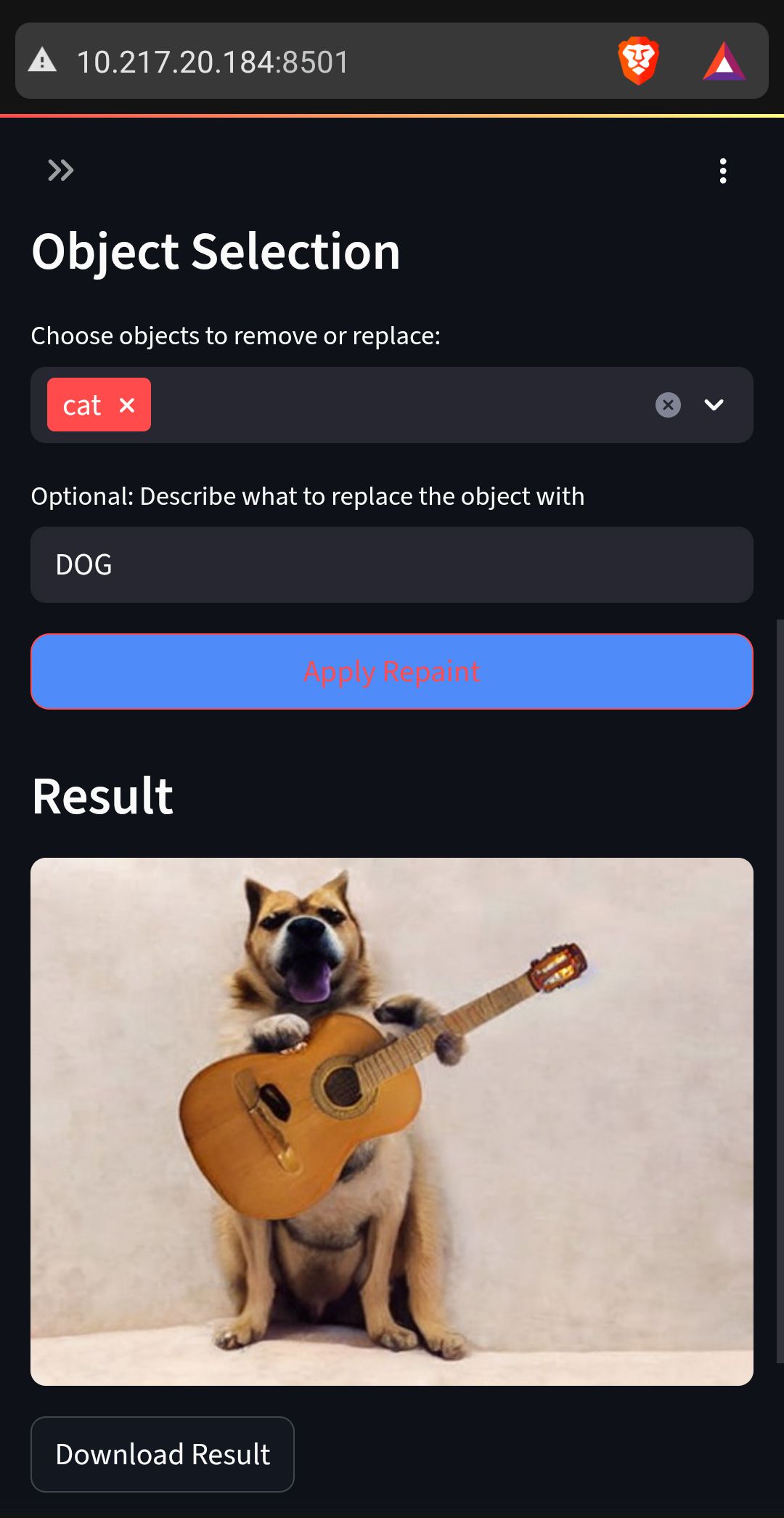

- Inpainting: Diffusion model generates realistic content to fill masked areas

- Result Display: Multiple generated results are displayed for the user to select and download

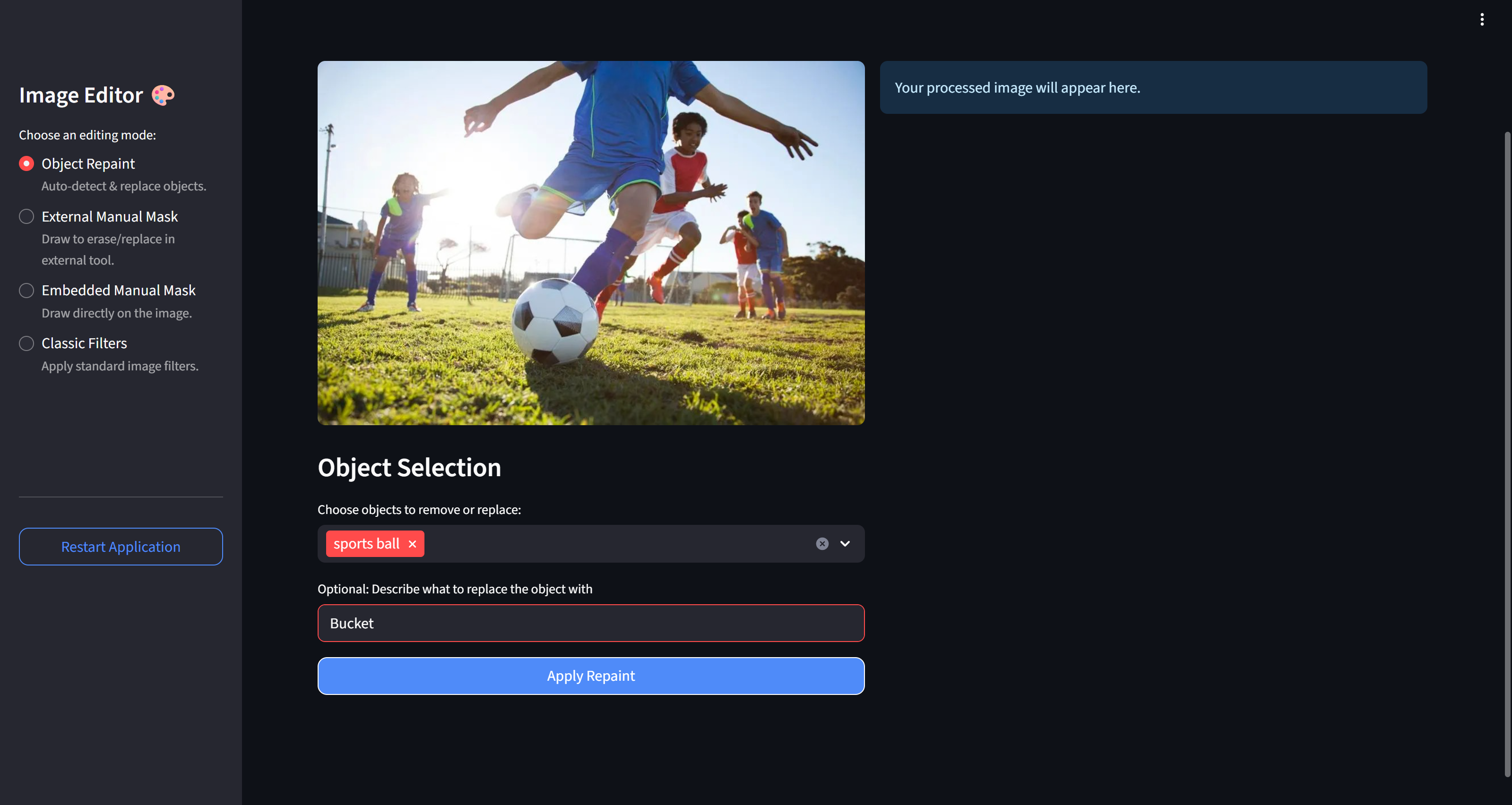

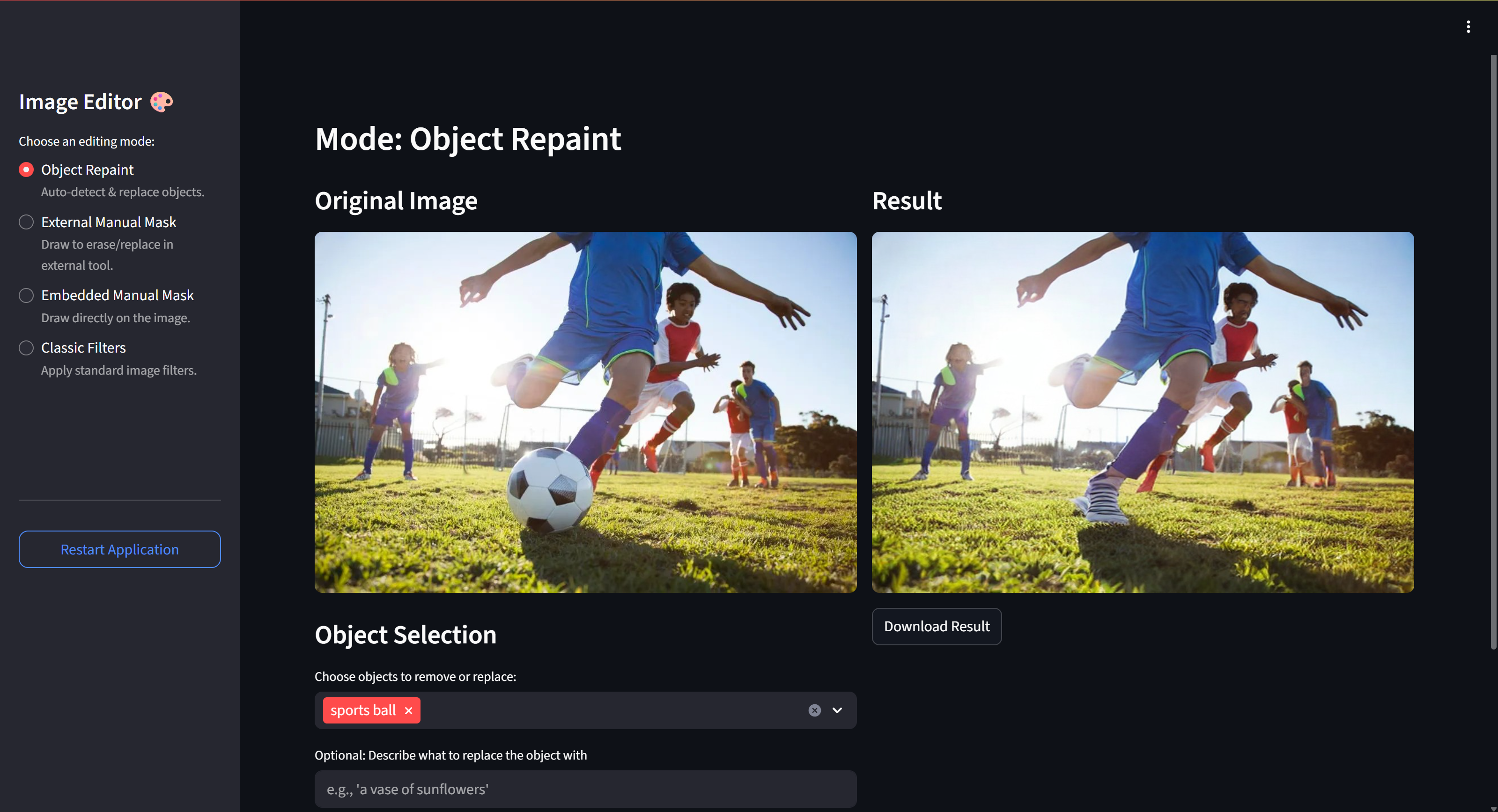
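The Object Selection and Mask Generation steps can be illustrated by rasterizing the selected detections into one binary mask. A minimal sketch, assuming each detection carries an axis-aligned box `(x1, y1, x2, y2)` in pixel coordinates (the /detect endpoint returns bounding boxes) and using a hypothetical `padding` margin so the mask fully covers object edges; the project itself may build masks from per-pixel segmentations rather than boxes.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), with x2/y2 exclusive


def boxes_to_mask(boxes: Sequence[Box], width: int, height: int,
                  padding: int = 0) -> List[List[int]]:
    """Union of the selected boxes as a binary mask (1 = region to inpaint)."""
    mask = [[0] * width for _ in range(height)]
    for x1, y1, x2, y2 in boxes:
        # Grow each box by `padding` pixels and clamp it to the image bounds
        x1, y1 = max(0, x1 - padding), max(0, y1 - padding)
        x2, y2 = min(width, x2 + padding), min(height, y2 + padding)
        for y in range(y1, y2):
            for x in range(x1, x2):
                mask[y][x] = 1
    return mask


def select_boxes(detections: List[dict], selected: Sequence[int]) -> List[Box]:
    """Pick the boxes of the detections the user selected, by index.

    The "box" key is a hypothetical detection field used for illustration.
    """
    return [tuple(detections[i]["box"]) for i in selected]


if __name__ == "__main__":
    detections = [{"box": (0, 0, 2, 2)}, {"box": (3, 1, 4, 3)}]
    mask = boxes_to_mask(select_boxes(detections, [0, 1]), width=5, height=3)
    for row in mask:
        print(row)
```

Selecting several objects (as in the Multi-Object Selection feature) simply adds more boxes to the union, so one inpainting pass can cover all of them.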

Demo Gallery

Desktop Interface

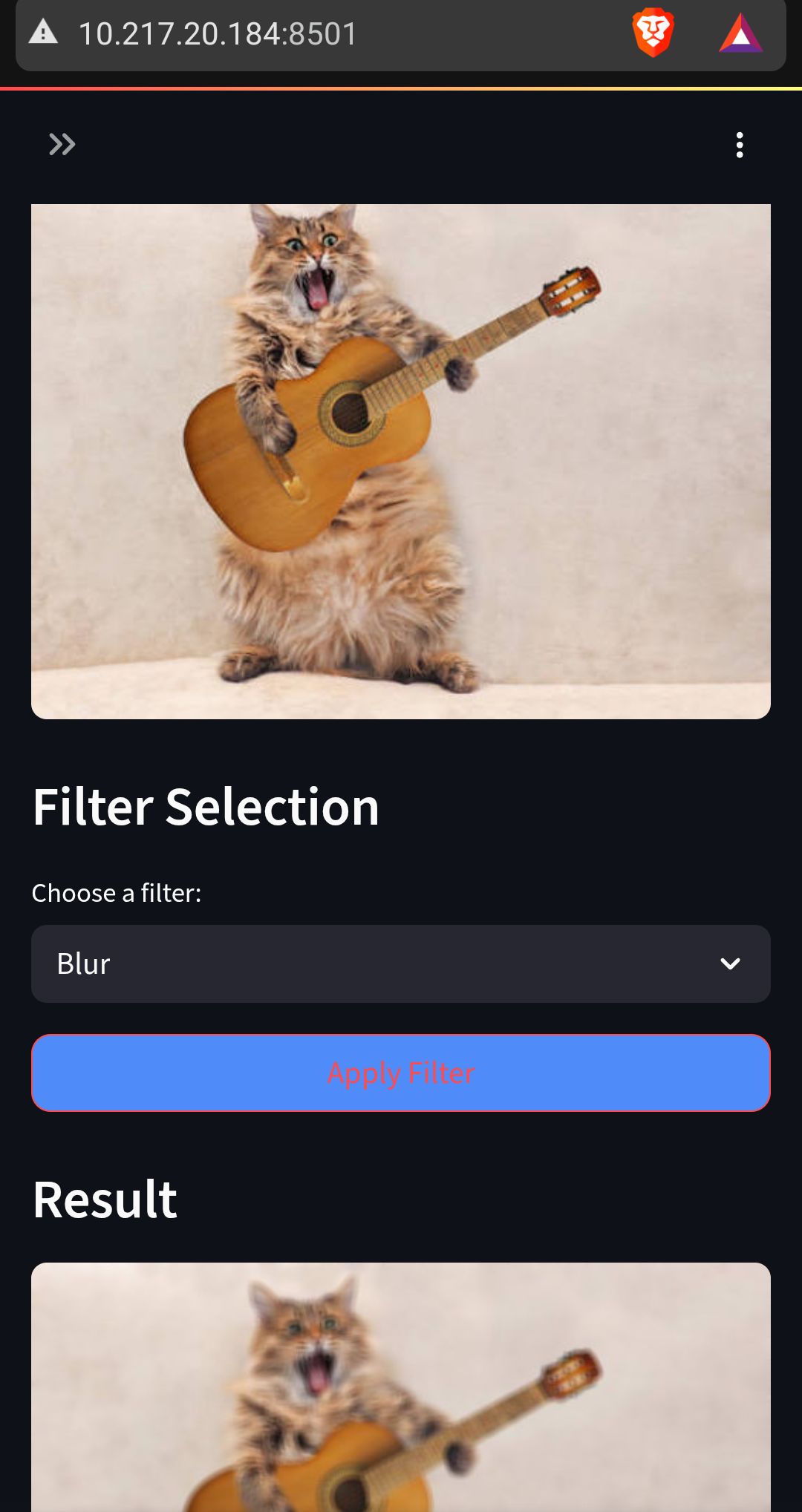

Mobile Interface

Classic Filters

Technology Stack

Core Technologies

AI/ML Models

Development Tools

Setup & Installation

Prerequisites

- Python 3.9 or higher

- CUDA-capable GPU (recommended for faster inference)

- Conda or Python virtual environment

- 8GB+ RAM (16GB recommended)
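The first prerequisites can be checked from Python before installing anything else. This is a minimal sketch, not a script from the repository: it reports the interpreter version and, only if PyTorch is already importable, whether `torch.cuda.is_available()` sees a usable CUDA GPU. RAM is not checked, to keep the sketch within the standard library.

```python
import importlib.util
import sys


def check_prerequisites(min_python=(3, 9)) -> dict:
    """Report whether the Python version and optional CUDA GPU requirements are met."""
    report = {
        "python": sys.version.split()[0],
        "python_ok": sys.version_info >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        # Imported lazily so the check also works before PyTorch is installed
        import torch
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_prerequisites().items():
        print(f"{key}: {value}")
```

A `cuda_available: False` result is not fatal, since the GPU is only recommended; inference will simply be slower.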

Installation Steps

1. Clone Repository

git clone https://github.com/Mahanth-Maha/Inpainting.git

cd Inpainting

2. Create Virtual Environment

conda create -n inpaint python=3.9 -y

conda activate inpaint

3. Install Jupyter Kernel (Optional)

conda install -c anaconda ipykernel -y

python -m ipykernel install --user --name inpaint --display-name "inpaint"

4. Install PyTorch

Visit pytorch.org for platform-specific instructions, or use:

# For CUDA 12.8

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu128

5. Install Dependencies

pip install -r requirements.txt

6. Configure Environment

cp env_template .env

# Edit .env file with your configurations

Default .env settings:

ANNOTE_MAPPING_FILE_PATH="models/annotations/coco_category_mapping.json"

DEBUG_LEVEL="INFO"

Running the Application

Start Backend Server

In Terminal 1 (keep running):

conda activate inpaint

cd src/backend

uvicorn main:app --reload

Start Frontend Web App

In Terminal 2 (keep running):

conda activate inpaint

cd src/frontend

streamlit run app.py

Note: The web app will automatically launch in your default browser at http://localhost:8501
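Before following the workflow in the Usage Guide, it can help to confirm that both servers answer. A small standard-library sketch, assuming uvicorn's default address 127.0.0.1:8000 (the command above passes no --host or --port) and FastAPI's default interactive docs route /docs; the Streamlit URL is the one from the note above. Any HTTP reply, even an error status, counts as "up".

```python
import urllib.error
import urllib.request

# Opener that ignores http_proxy/https_proxy variables, since these are local checks
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at `url`, whatever the status code."""
    try:
        with _OPENER.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server replied, just not with a 2xx status
    except OSError:
        return False  # URLError, connection refused, timeout, ...


if __name__ == "__main__":
    # Assumed addresses: uvicorn's default port with FastAPI's /docs page,
    # and Streamlit's default port 8501
    checks = {
        "backend": "http://127.0.0.1:8000/docs",
        "frontend": "http://localhost:8501",
    }
    for name, url in checks.items():
        print(f"{name}: {'up' if is_up(url) else 'down'}")
```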

Usage Guide

Basic Workflow

- Launch Application: Ensure both backend and frontend servers are running

- Upload Image: Click "Browse files" or drag-and-drop an image into the upload area

- Wait for Detection: System automatically detects all objects in the image

- Select Objects: Choose which detected objects you want to remove or modify

- Optional Text Prompt: Add custom text prompts to guide the inpainting generation

- Generate Results: Click "Inpaint" to generate multiple result variations

- Review & Download: Browse generated results and download your preferred output

API Endpoints

POST /detect

Detects objects in the uploaded image and returns their bounding boxes and categories

POST /inpaint

Performs inpainting on the selected regions and returns the generated images

POST /filter

Applies classic image filters (blur, sharpen, edge detection, etc.)
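A minimal standard-library client for POST /detect. The request schema is not documented here, so several things are assumptions: the image is sent as multipart form data in a field named file (hypothetical; it must match the parameter name in the backend's route), the backend runs at uvicorn's default 127.0.0.1:8000, and the response body is JSON. The backend's /docs page shows the real field names.

```python
import json
import mimetypes
import os
import urllib.request
import uuid
from typing import Tuple


def encode_multipart(field: str, filename: str, data: bytes) -> Tuple[bytes, str]:
    """Build a multipart/form-data body holding a single file field."""
    boundary = uuid.uuid4().hex
    ctype = mimetypes.guess_type(filename)[0] or "application/octet-stream"
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {ctype}\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def detect(image_path: str, base_url: str = "http://127.0.0.1:8000") -> dict:
    """POST an image to /detect and return the decoded JSON response.

    The form field name "file" and the JSON response are assumptions.
    """
    with open(image_path, "rb") as f:
        body, content_type = encode_multipart(
            "file", os.path.basename(image_path), f.read()
        )
    req = urllib.request.Request(
        f"{base_url}/detect",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)
```

With the backend running, `detect("photo.jpg")` would return whatever detection payload the server produces; /inpaint and /filter could be called the same way once their parameters are known.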

Feature Highlights

Multi-Object Selection

Select multiple objects simultaneously for batch removal or modification, saving time on complex edits.

Custom Prompts

Guide the inpainting process with text prompts to replace removed objects with specific content.

High-Resolution Support

Process high-resolution images while maintaining quality and detail in generated regions.
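How the project handles high-resolution inputs is not described here. One common approach, shown only as a hedged sketch, is to inpaint a window around the mask instead of the whole image: the window keeps enough surrounding context for the model, and the result is pasted back so pixels outside the mask keep their full original detail. The helper names and the default `margin` are hypothetical.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), with x2/y2 exclusive


def mask_bbox(mask: List[List[int]]) -> Optional[Box]:
    """Tight box around the nonzero pixels of a binary mask, or None if empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not ys:
        return None
    xs = [x for row in mask for x, v in enumerate(row) if v]
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def context_crop(mask: List[List[int]], margin: int = 64) -> Optional[Box]:
    """Mask box grown by `margin` pixels of context, clamped to the image.

    Only this window would be sent to the model (hypothetical strategy);
    the output is then pasted back into the full-resolution image.
    """
    box = mask_bbox(mask)
    if box is None:
        return None
    height, width = len(mask), len(mask[0])
    x1, y1, x2, y2 = box
    return (max(0, x1 - margin), max(0, y1 - margin),
            min(width, x2 + margin), min(height, y2 + margin))
```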

Filter Library

Access classic image processing filters for quick edits beyond AI-powered inpainting.
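The classic filters that POST /filter lists (blur, sharpen, edge detection) are traditionally 3x3 convolutions. A dependency-free sketch on a grayscale image stored as nested lists, with border pixels handled by clamping coordinates to the nearest edge. The kernels are textbook choices, not necessarily the ones this project uses; a production version would more likely rely on OpenCV or Pillow.

```python
from typing import Dict, List

Image = List[List[float]]

KERNELS: Dict[str, List[List[float]]] = {
    "blur": [[1 / 9] * 3 for _ in range(3)],            # 3x3 box blur
    "sharpen": [[0, -1, 0], [-1, 5, -1], [0, -1, 0]],
    "edge": [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]],  # Laplacian-style edge detector
}


def apply_filter(img: Image, name: str) -> List[List[int]]:
    """Apply a named 3x3 kernel to a grayscale image, clamping results to 0..255.

    Out-of-range neighbours reuse the nearest edge pixel. All kernels here are
    symmetric, so correlation and convolution give the same result.
    """
    k = KERNELS[name]
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += k[dy + 1][dx + 1] * img[yy][xx]
            row.append(int(min(max(round(acc), 0), 255)))
        out.append(row)
    return out
```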

Resources & Links

Future Enhancements

- Custom Model Training: Support for fine-tuning inpainting models on domain-specific datasets

- Batch Processing: Process multiple images simultaneously for efficient workflow

- Advanced Masking: Manual brush-based masking alongside automatic detection

- Style Transfer: Apply artistic styles to inpainted regions for creative effects

- Video Inpainting: Extend capabilities to video object removal and temporal consistency

- Cloud Deployment: Scalable cloud-based service for wider accessibility

Contact & Support

Project Contributors

Get in Touch

For collaborations, research inquiries, or general questions, contact:

Mahanth Yalla

Email: mahanthyalla [at] iisc.ac.in

Website: mahanthyalla.in

M.Tech Artificial Intelligence,

Indian Institute of Science, Bengaluru

Md Kaif Alam

Email: kaifalam [at] iisc.ac.in

M.Tech Artificial Intelligence,

Indian Institute of Science, Bengaluru